Reply To: ChatGPT et al


Hi everyone, I’ve been meaning to return to this thread for a while. Ruth’s comment about librarians being part of the conversation on AI is so important, and with everything moving so fast, we have to keep working to make sure our voices are heard.

Note that I’ve done so much thinking in the meantime that I’ve ended up with two quite long posts. One day I will learn to drop my thoughts in along the way to facilitate conversation better!

I still find trying to keep up with developments in AI exhausting (and often distracting). I think part of the problem is that, as information professionals, we have a responsibility to think through the practicalities and ethics of AI in a way that early-adopter classroom colleagues don’t always seem to. It’s relatively easy to throw out a cool new toy for your class to play with, but much more time-intensive to work out how trustworthy, ethical and effective that tool actually is (even down to checking that the age limits on the tool you are recommending are appropriate for the class you are recommending it to).

I’m also finding the rate at which new tools get monetised frustrating. For example, we designed a Psychology inquiry using Elicit last year, but just as the inquiry launched, they changed their model and put certain features behind paywalls, which ruined large parts of the inquiry. So I’m a bit wary of recommending or designing anything that relies on specific AI tools, because the market is still evolving very fast.

So how do we deal with AI (which we must: our students are growing up in a world where it has become the norm, so we can’t just ignore it)? We’ve gone down the route of general principles: teaching healthy scepticism and helping students to work out what AI is actually good at, and what it only appears superficially good at.

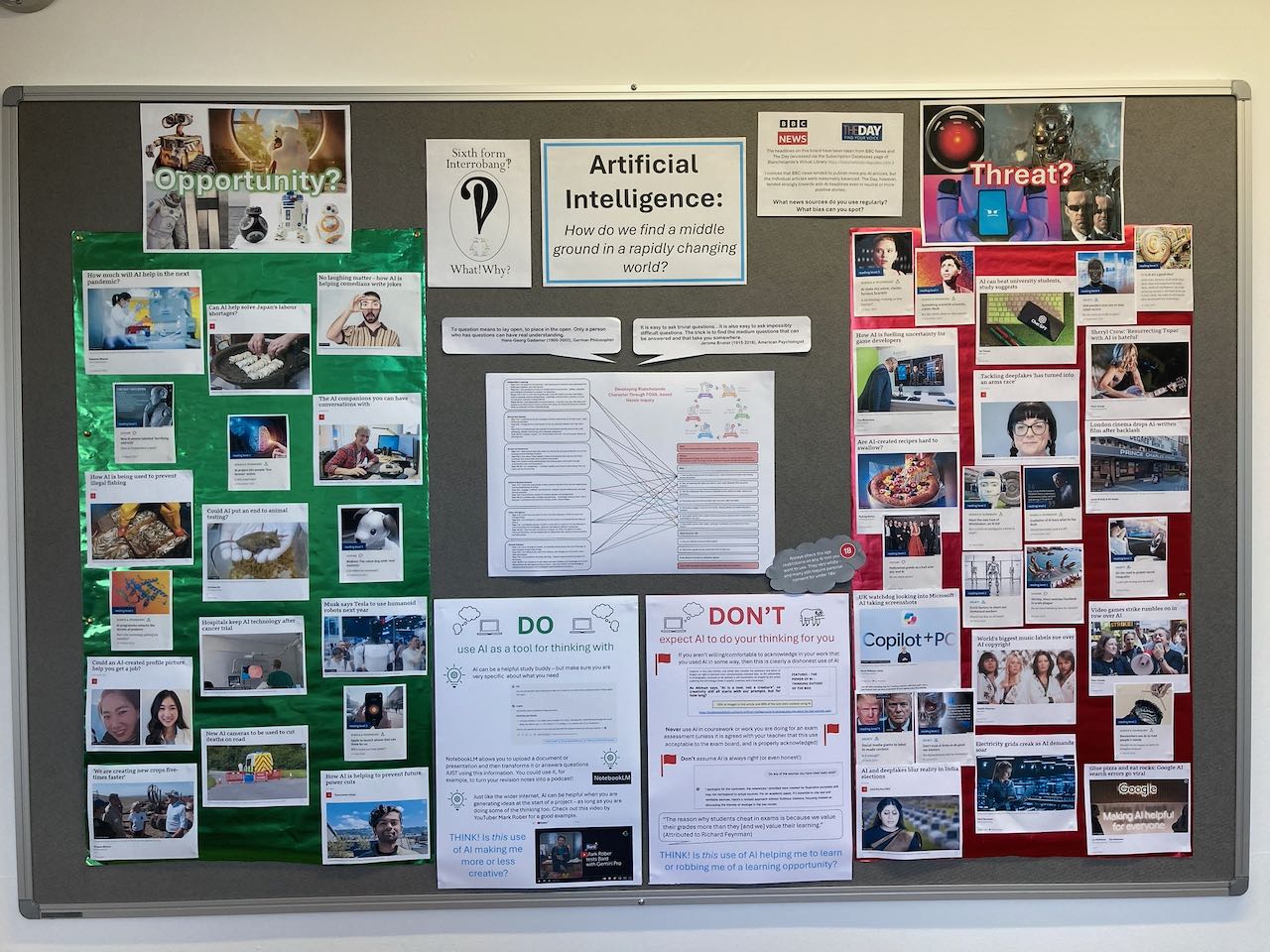

The image above shows a display I made at the start of term to support our Y12 Interrobang!? (inquiry-based Sixth Form induction) course. This six-week course is intended to prepare students for A-level study, and this year our inquiry focused on AI and how it was affecting, both positively and negatively, the different subjects students were studying.

If you zoom in, you’ll see that my advice on using AI tools boils down to:

- Do use AI as a tool for thinking with

- Don’t expect AI to do your thinking for you

- Ask yourself:

  - Is this use of AI making me more or less creative?

  - Is this use of AI helping me to learn, or robbing me of a learning opportunity?

  - Would I be comfortable admitting this use of AI (or might it get me into trouble)?

That last one is something I will also say to staff when we run a twilight INSET on AI in the classroom in a couple of weeks. As educators, we have a duty to be transparent with students and parents, so if a teacher uses a site like Magic School AI to help write a letter about a school trip, or asks ChatGPT to generate some exam-style questions, then they should publicly declare that. If we are embarrassed to admit our own use of AI, that is a clear indicator that we should not be using AI for that task. We cannot tell students that they are cheating if they use AI without declaring it, and then fail to hold ourselves to the same standard. These are exactly the opportunities we should embrace to open up the conversation about how AI works and what it is (or isn’t) good for.

One example I would give of a potentially positive use of AI is a student who is making a website as his HPQ artefact. He isn’t particularly artistic, and art is not the focus of the website, but he does need some copyright-cleared images to make his site more attractive and fit for purpose. I think an AI image generator might be a good source for those images. The images are important to his product, but he is not being assessed on his ability to produce them himself, and he knows his topic well enough to judge whether the generated images are appropriate, and to adjust them if not. This use of AI would make him more creative than he could be on his own, would be part of his learning experience, and would be fully acknowledged.

Another example, one I really wanted to love but find myself feeling very ambivalent about, is NotebookLM; I will address it in the next post.