Reply To: ChatGPT et al

Notebook LM

(This is the second of two posts – the first (above) is more generally about how I’m trying to deal with AI in school at the moment; this one is about my experience of Notebook LM.)

One of my issues with students using AI for revision and research is that AI still tends to hallucinate, and it can be difficult to tell where it gets its information from (although Google Gemini and Bing are better than ChatGPT at pointing to sources that contain information similar to their answers). If you don’t already know a fair bit about your topic, it can be hard to tell whether the answer is trustworthy – and if you did know a lot about the topic, you probably wouldn’t be doing the research in the first place!

So Google’s Notebook LM felt like a bit of a game-changer in this respect, because initial claims suggested that you could upload a source (or several) and it would find answers from those sources alone. A number of reviewers I saw enthused about its ability to synthesise sources and spot patterns and trends. It seemed like a safe bet for students wanting to revise by uploading their revision notes, or trying to understand a long, tricky research paper, so I thought I would give it a go.

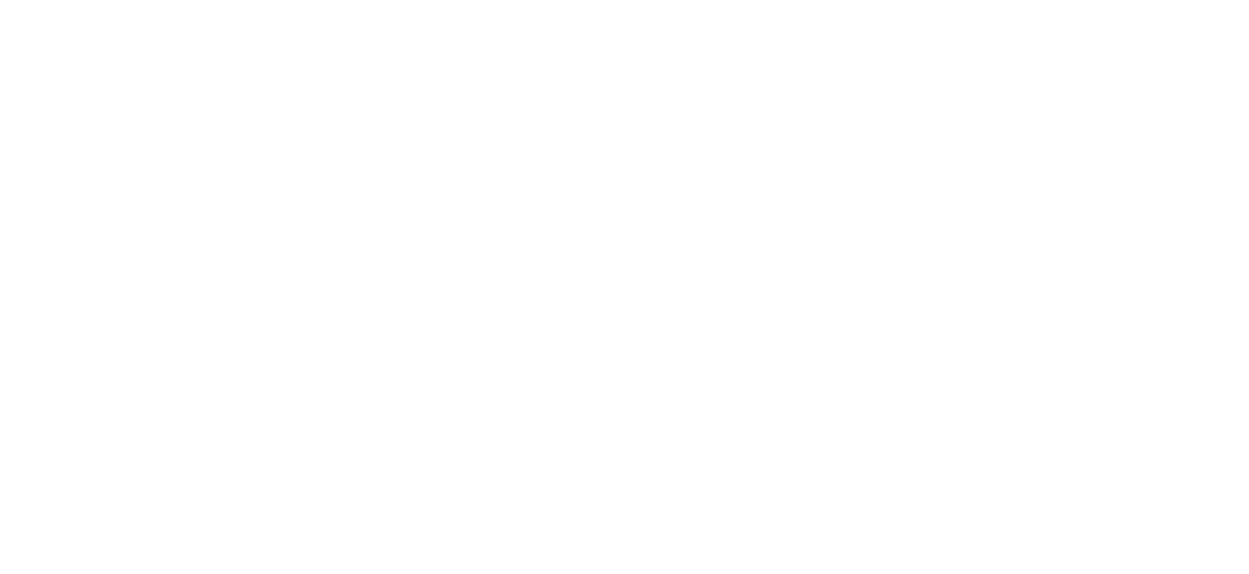

At first glance it is really impressive. I tried it with some notes from BBC Bitesize on the Norman Conquest for my Y7 son’s History revision. It offers options to produce an FAQ, a study guide, a table of contents, a briefing document, a timeline and a Deep Dive conversation (a podcast-style conversational walk-through of the source). It also generates a summary and suggested questions, and you can have a chatbot conversation about your source (see image above). This was a fairly simple test of its abilities, and all of the study notes looked pretty good – the kind of thing a student might produce for themselves, but perhaps better. (That said, I would argue that part of the value of these kinds of revision notes is the work you do to produce them, so I’m not sure how helpful they would be for revision if the computer did that work for you.)

Where it started to get a little weird was the Deep Dive Conversation option. My 11-year-old son and I initially found it a little creepy how real it sounded, but he was very engaged, and it was a new way to get him to interact with the revision material, with two ‘hosts’ having a very chatty conversation about the lead-up to the Battle of Hastings. I was starting to feel more comfortable with it as a tool… until, towards the end of the conversation, it suddenly started to wander off the material I had given it. There was nothing obviously inaccurate (this is a very well-known topic, so I wouldn’t expect glaring errors), but it started to mention events that were not in the text I had provided (my text ended with the Battle of Stamford Bridge, but the conversation went on to discuss the Battle of Hastings), and it was very clear that the conversation had not been generated from my text alone.

This was a real concern for me, as part of Notebook LM’s original appeal was that I could be sure where the information was coming from. So I tried it on something trickier and fed it our newly revamped school library webpage to see what it made of that: https://www.blanchelande.co.uk/senior/academic/libra/

Again, the results were impressive. It produced an accurate summary and briefing notes. The timeline was pretty weird and inaccurate, but that was to be expected, because this material is not at all suited to producing a timeline, so the problems were easy to spot. The 20-minute Deep Dive conversation that it produced caused me some serious concerns, though. In some ways it had a certain comedy value, as the hosts became enthusiastic cheerleaders for our school in particular and inquiry in general. It was also weirdly impressive how they appeared to ‘grasp’ the value of inquiry learning in a way that many of our sector colleagues still have not – and this went beyond just parroting phrases I had used.

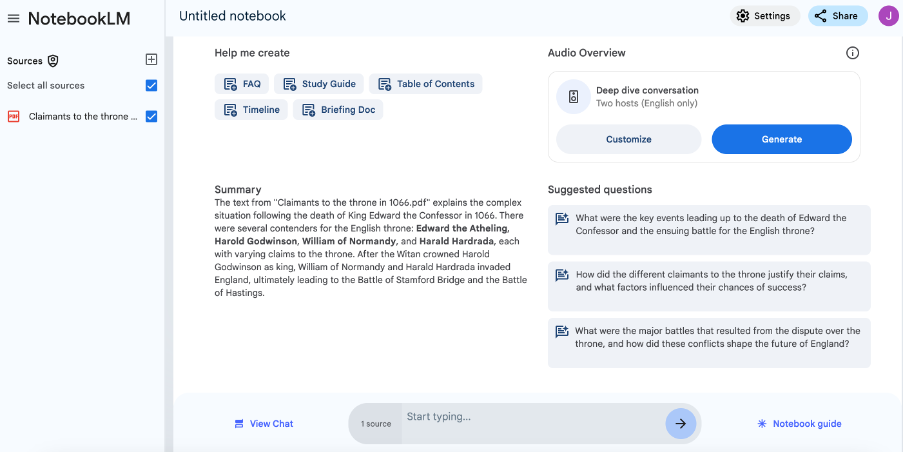

What REALLY troubled me, though, was that because the page never actually said what FOSIL stood for, the AI completely made it up.

And it did it really well (try listening to the audio below from about 2 minutes in). If we hadn’t known that it was talking complete nonsense, there would have been no way to tell. According to Notebook LM, FOSIL stands for Focus, Organise, Search, Interpret and Learn (actually, as I am sure any regulars on this forum will know, it is Framework of Skills for Inquiry Learning). The AI then proceeded to give a detailed breakdown of this set of bogus FOSIL stages mapped onto the Hero’s Journey (which is mentioned on the site, but without any details, so that too was clearly drawn from elsewhere). And it was really plausible – I might almost describe it as ‘thoughtful’ or ‘insightful’. But it was completely made up. Note also that, again, the final quarter of the conversation went way beyond the source I had provided and turned into a chat about how useful inquiry could be in everyday life beyond school. Very well done, and nothing too worrying in the content, but well off track from the original source.

EDIT: Deep Dive Conversation link – https://fosil.org.uk/wp-content/uploads/2024/10/Blanchelande-Library-1.mp3 (the original link required users to have a Notebook LM login; this one is just an MP3 file).

It is this blend of reality and highly plausible fantasy that makes AI a really dangerous learning tool. What if a student were using this for revision, and reproduced those made up FOSIL stages on a test? Or if we were using it to summarise, analyse or synthesise research papers? How could we spot the fantasy, unless we already knew the topic so well that we didn’t really need the AI tool anyway?

I tried asking in the chat what FOSIL stood for, because with the Norman Conquest source the chat had proved more reliable and wouldn’t answer questions beyond the text. This time, though, it said:

This is also subtly wrong, but in a different way.

I wanted to love Notebook LM because I thought it had solved the hallucination problem, and the problem of using unreliable secondary sources, but it turns out that it hasn’t.

Caveat emptor.

(Speaking of buyer beware – Google says: “NotebookLM is still in the early testing phase, so we are not charging for access at this time.” I’ve also seen articles about a paid version for businesses and universities on the horizon, so I don’t expect it to remain free for long. It is in a testing phase in which Google needs lots of willing people to try it out and help improve it before it can be marketed. So, to complete my cliché bundle: “if you aren’t paying for it, you are not the customer but the product”. I’m aware how ironic it is that I am saying this on a free website, but my defence is that we are all volunteers in a community that is building something together, not a for-profit company! No customers here, only colleagues and friends.)